MVP development is a spectrum. Our character and our appetite for rejection dictate which approach we take.

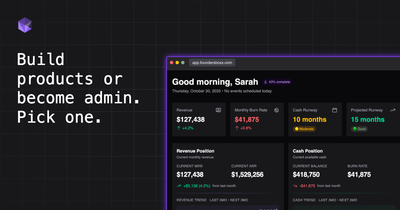

In one corner we have the scrappy founder. Guns blazing and ready to eat raw feedback. Their MVP is truly minimal. It's been scoped down to the bone, tested for technical functionality, and shipped. Cue the crickets. The product works. It does exactly what it was designed to do. Nobody adopts it.

In the other corner we have the heavyweight builder. Mr. "one more feature and it'll be ready," "let me just refactor this." There is always another feature, another refactor, another improvement. Months pass. The product never ships.

We know the Lean Startup principles. Everyone's read Eric Ries. We all nod along when someone mentions "build-measure-learn." But how many of us actually try to understand the three steps? They're not isolated—they feed one another.

Both failures stem from the same delusion: believing the battle is about features when it's actually only about changing behaviour. You can achieve this with one feature or a hundred.

The lies that come in opposite flavours

The problem isn't that we don't know how MVPs work. We can recite the definition. We've heard the advice. We often ignore it when it applies to us.

The early shipper's lie: "We'll add features later based on feedback"

Translation: We're shipping something we know isn't complete, betting that customers will tell us what's missing.

The reality: Customers don't give feedback on products they abandon in the first session. We get one shot at a first impression. If our MVP doesn't deliver immediate value, we don't get to iterate. We just lose that customer.

The late shipper's lie: "I want to launch with something truly viable, not half-baked"

Translation: We're building more features to avoid discovering whether customers want any of this.

The reality: As long as the product isn't shipped, it can't fail. Every additional feature delays the moment when real customers might say "this isn't what I need." We're using "building a better MVP" as protection from rejection.

One founder is fighting to ship minimal features. The other is fighting to ship complete features. Neither is fighting to understand customer behaviour.

Neither understands that "viable" means customers choose a solution over their current behaviour. Our MVP isn't competing against other products. It's competing against customers doing nothing. Most people's default choice is to keep doing what they already do, even if it's painful.

What actually happens during "build-measure-learn"

The theory: We build something minimal, measure how customers use it, learn from their behaviour, and iterate.

What the early shipper does: Build something, measure that people aren't using it, then build more features without understanding why the first version failed. The "learn" step disappears. Usage goes down, and they interpret this as "we need more features" instead of "we built something nobody wants."

We can watch the early shipper fail in real time. They launch an MVP. Week one traffic spikes, week two it drops. By week three, they're already building the next feature set. Nobody stops to ask why 90% of people who tried it never came back.

What the late shipper does: Build something, imagine how customers might use it, then build more features based on those imagined scenarios. The "measure" and "learn" steps never happen. Customer conversations become increasingly hypothetical because there's nothing to show yet.

We can watch the late shipper fail in slow motion. Month one they're finalizing core features. Month two they're adding "essential" improvements. Month three they're refactoring for future features. The product gets more sophisticated. The launch date keeps moving.

The meta pattern we miss

Here's what nobody talks about: We ourselves go through the build-measure-learn framework as founders.

Our first MVP is the "build" phase. We're testing our hypothesis about what makes a product viable.

The market response is the "measure" phase. Customers either change their behaviour or they don't.

Recognising why it failed is the "learn" phase. This is where most of us get stuck. We measure the failure but don't learn from it.

The irony: We expect customers to adopt our product immediately, but we often need multiple attempts to internalise the same frameworks we're trying to apply. Our second MVP attempt mirrors the framework much more closely. We actually internalise suggestions and feedback only after experiencing the cost of ignoring them.

Frameworks are a hurdle race. Knowing them and applying them when our own money and reputation are on the line are completely different activities. Framework knowledge exists in a calm, rational space. Building an MVP happens in chaos, time pressure, and fear.

Failure teaches what frameworks can't. After the first failed attempt, we recognise patterns. The advice was always there. We just weren't ready to hear it until we'd paid for ignoring it.

The brutal truth about viability

"Viable" means customers choose our solution over their current behaviour. Not that it works. Not that it's technically impressive. Not that it has all the features we think it needs.

Most MVPs fail because we define viability from our own perspective, not the customer's. "It does X" feels viable when we built X. "It solves my problem better than my current solution" is what viable means from the customer's perspective.

The early shipper discovers this gap after launch. The late shipper never discovers it because they never launch. Both are protecting themselves from the same truth: We might have built something nobody wants.

What the separation of knowledge and execution actually costs

The advice hasn't failed. We have access to decades of validated frameworks about MVP development. The failure rate stays high because having information and applying it are different problems.

We can tell ourselves exactly what will happen if we skip customer validation. Do it in front of a mirror. We'll nod, agree completely, and then either:

- Skip customer validation because we're in a hurry and we're pretty sure our case is different

- Do extensive customer validation but never ship because we're terrified the feedback wasn't comprehensive enough

This gap, between what we know and what we do, explains why the same MVP mistakes repeat across thousands of companies even though nearly everyone knows the same startup advice.

The frameworks aren't wrong. The failure mode is thinking we're fighting to build the right number of features when we're actually fighting to change deeply ingrained customer behaviour.

We already know what makes an MVP viable. The question is whether and when we recognise that we're also going through build-measure-learn as founders, and whether we actually learn from our previous attempts instead of just measuring their failure.